Kubernetes (K8s) is a portable, extensible, open-source platform for managing containerized workloads and services. It supports declarative configuration and automation, and its ecosystem is large and growing quickly. Many now regard it as the de facto standard for container orchestration.

This blog post takes a deep dive into Kubernetes storage and its benefits.

What are Container Orchestrators?

Container orchestrators automate the management, scaling, provisioning, maintenance, and many other tasks involved in deploying containers. There are a few well-known container orchestrators with different use cases:

Swarm: An orchestrator directly integrated with the Docker Engine and well suited to clusters where security is the primary concern.

Apache Mesos: An older orchestrator of containerized and non-containerized workloads, originally developed at UC Berkeley and sometimes used for big-data workloads.

Kubernetes: As of this writing, the industry's most widely used container orchestrator is the open-source Kubernetes project, which emerged from Google in 2014. Originally based on Google's internal Borg cluster manager, Kubernetes has been maintained under the auspices of the Cloud Native Computing Foundation (CNCF) since 2015.

Pronounced (roughly) "koo-brr-net-ees" and sometimes abbreviated to k8s ("kay-eights" or "kates"), the project's name derives from the Ancient Greek κυβερνήτης, which means "helmsman" or "pilot." If we understand software containers through the metaphor of a shipping container, we can think of Kubernetes as the captain who directs those containers where they need to go.

What is Kubernetes?

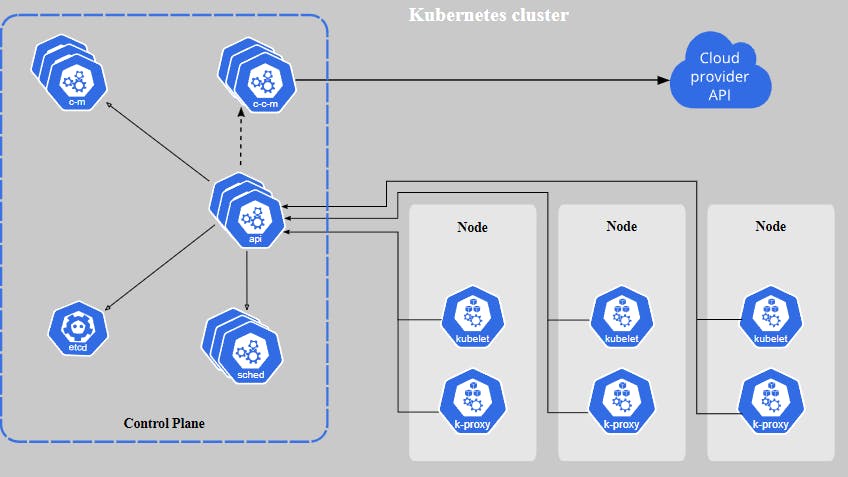

Kubernetes is more than a top-down issuer of orders; it is also the substrate, or base layer, that containerized applications run on. In this sense, Kubernetes is not only the ship's captain but the ship itself: a self-sufficient and potentially enormous vessel, like an aircraft carrier or the starship Enterprise. This floating world can host all kinds of activity.

An organization might run dozens or hundreds of applications or services on a Kubernetes cluster, which is a set of one or more physical or virtual machines gathered into a coordinated software substrate by Kubernetes. In this sense, Kubernetes is sometimes described as an operating system for the cloud.

Why Kubernetes?

Kubernetes gives you a framework to run distributed systems resiliently. It takes care of scaling and failover for your applications, provides deployment patterns, and more. It provides you with the following:

Service discovery and load balancing

Application deployment and automation

Automated rollouts and rollbacks

Automatic bin packing

Self-healing

Who runs Kubernetes?

Kubernetes is designed to run enterprise applications at scale, and it has achieved widespread adoption for this reason. The Cloud Native Computing Foundation's 2021 Cloud Native Survey found that 96% of organizations among its respondents were either using or evaluating Kubernetes.

Within those organizations there are, broadly, two personas who interact with Kubernetes: developers and operators. These two roles have different needs, requirements, and usage patterns when it comes to Kubernetes:

Developers need to be able to access resources on a Kubernetes cluster, build applications for a Kubernetes environment, and run those applications on the cluster.

Operators need to be able to manage and monitor the cluster and its attendant infrastructure, including storage and networking considerations.

There is some overlap between the skills and knowledge each of these personas requires. Indeed, the same person might fill both roles in some circumstances. Both developers and operators need a basic understanding of the anatomy of a Kubernetes cluster.

Storage orchestration for Kubernetes

Kubernetes allows users to automatically mount a storage system of their choice, such as local storage, storage from public cloud providers, and more. You still need to provide the underlying storage system.

However, practically all production applications are stateful; that is, they require external storage. To run applications that need persistent storage inside containers, a layer is required that provides persistent storage to those containers, independent of the lifecycle of the containers themselves.

Kubernetes provides an API to users and administrators that abstracts the details of how storage is provided from how it is consumed. If you're new to integrating persistent storage with Kubernetes, there are a few terms to understand:

Container Storage Interface (CSI): This specification provides a standardized way for all container orchestrators to connect with storage systems such as Ondat. Before CSI was released in Kubernetes, storage vendors had to write their integration layer directly into the Kubernetes source code. This made updates difficult and time-consuming, as any bug could crash Kubernetes.

Storage Class (SC): A Kubernetes StorageClass is a way for administrators to pre-define the types of storage that Kubernetes users can provision and attach to their applications.

Persistent Volume (PV): A persistent volume is a virtual storage instance that is added as a volume to the cluster. The PV can point to either physical storage hardware or software-defined storage such as Ondat.

Persistent Volume Claim (PVC): A PVC is a request to provision storage of a specific type and configuration.

With software-defined storage such as Ondat integrated with Kubernetes, it's possible to use Kubernetes to dynamically provision storage resources as well as compute resources, giving users the same agility and flexibility for storage that Kubernetes already provides for compute.
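To make the relationship between these terms concrete, here is a minimal sketch of a StorageClass and a PVC that requests storage from it. The object names (`fast-replicated`, `app-data`) and the provisioner string (`csi.example.com`) are assumptions for illustration only; the real provisioner name depends on the CSI driver installed in your cluster. The manifests are built as Python dictionaries and printed as JSON, which `kubectl` accepts alongside YAML.

```python
import json

# Hypothetical names for illustration. The provisioner must match the
# CSI driver that is actually installed in the cluster.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "fast-replicated"},
    "provisioner": "csi.example.com",
    "reclaimPolicy": "Delete",
    "allowVolumeExpansion": True,
}

# The claim names the class; a matching PV is then provisioned dynamically.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "app-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": storage_class["metadata"]["name"],
        "resources": {"requests": {"storage": "10Gi"}},
    },
}


def to_manifest(*objects):
    """Wrap several objects in a v1 List so they can be applied together."""
    wrapper = {"apiVersion": "v1", "kind": "List", "items": list(objects)}
    return json.dumps(wrapper, indent=2)


if __name__ == "__main__":
    print(to_manifest(storage_class, pvc))
```

If the script were saved as `manifests.py` (a hypothetical filename), its output could be piped to `kubectl apply -f -`.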

Kubernetes Storage Best Practices

Here are some important practices and what they mean for Kubernetes users.

Kubernetes Volumes Settings

The PV lifecycle is independent of any particular container in the cluster. A PVC is a request made by a container user or application for a specific type of storage. When creating a PV, the Kubernetes documentation recommends the following:

Always include PVCs in the container configuration.

Never include PVs in the container configuration, because this tightly couples a container to a specific volume.

Always have a default StorageClass; otherwise, PVCs that don't specify a class will fail.

Give StorageClasses meaningful names.
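The recommendations above can be sketched as follows. This is an illustrative example under stated assumptions, not a definitive configuration: the class name `standard-ssd`, the provisioner `csi.example.com`, and the helper `pod_with_claim` are all hypothetical. The Pod refers to storage only through a PVC name, never to a PV directly, and the StorageClass is marked as the cluster default through the `storageclass.kubernetes.io/is-default-class` annotation.

```python
import json

# A StorageClass with a descriptive name, marked as the cluster default.
# The provisioner is a placeholder for whatever CSI driver is installed.
default_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {
        "name": "standard-ssd",
        "annotations": {"storageclass.kubernetes.io/is-default-class": "true"},
    },
    "provisioner": "csi.example.com",
}


def pod_with_claim(pod_name, image, claim_name, mount_path):
    """Build a Pod that mounts storage through a PVC, not a specific PV."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": image,
                    "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                }
            ],
            # The Pod only knows the claim name; Kubernetes resolves the PV.
            "volumes": [
                {"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}
            ],
        },
    }


if __name__ == "__main__":
    pod = pod_with_claim("web", "nginx:1.25", "app-data", "/var/lib/app")
    print(json.dumps(default_class, indent=2))
    print(json.dumps(pod, indent=2))
```

Because the Pod names only the claim, the same Pod definition can move between clusters whose underlying volumes differ.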

Limiting Storage Resource Consumption

It is advisable to place limits on containers' use of storage, reflecting the amount of storage available in the local data center or the budget available for cloud storage resources. There are two main ways to limit storage consumption by containers:

Resource Quotas: limit the amount of resources, including storage, CPU, and memory, that can be used by all containers within a Kubernetes namespace.

StorageClasses: a StorageClass can limit how much storage is provisioned to containers in response to a PVC.
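As a rough sketch of the first approach, the helper below builds a ResourceQuota that caps total requested storage and the number of PVCs in a namespace, and optionally caps requests against individual StorageClasses. The helper name `storage_quota`, the quota name, and the example values are assumptions for illustration; the quota keys themselves (`requests.storage`, `persistentvolumeclaims`, and the per-class `<class>.storageclass.storage.k8s.io/requests.storage`) are standard Kubernetes resource names.

```python
import json


def storage_quota(namespace, total_storage, max_claims, per_class=None):
    """Build a ResourceQuota limiting storage requests in one namespace.

    per_class maps a StorageClass name to the maximum storage that
    PVCs of that class may request in total.
    """
    hard = {
        "requests.storage": total_storage,
        "persistentvolumeclaims": str(max_claims),
    }
    for class_name, amount in (per_class or {}).items():
        key = f"{class_name}.storageclass.storage.k8s.io/requests.storage"
        hard[key] = amount
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "storage-quota", "namespace": namespace},
        "spec": {"hard": hard},
    }


if __name__ == "__main__":
    quota = storage_quota("team-a", "500Gi", 20, {"fast-replicated": "100Gi"})
    print(json.dumps(quota, indent=2))
```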

Resource Requests and Limits

Kubernetes provides resource requests and limits that help you manage resource consumption at the level of individual containers. A resource limit can also be specified for ephemeral storage. Setting resource requests and limits helps prevent containers from being constrained by resource scarcity on the container host, or from unexpectedly consuming too many resources.
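A minimal sketch of per-container requests and limits, including ephemeral storage, might look like this. The helper name `pod_with_limits`, the Pod name, the image, and the specific quantities are assumptions chosen for illustration; `ephemeral-storage` is the standard resource name Kubernetes uses for a container's local scratch space.

```python
import json


def pod_with_limits(pod_name, image):
    """Build a Pod whose container declares requests and limits."""
    resources = {
        # Requests: what the scheduler reserves for the container.
        "requests": {"cpu": "250m", "memory": "256Mi", "ephemeral-storage": "1Gi"},
        # Limits: the ceiling the container may not exceed.
        "limits": {"cpu": "500m", "memory": "512Mi", "ephemeral-storage": "2Gi"},
    }
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{"name": "app", "image": image, "resources": resources}]
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_with_limits("worker", "busybox:1.36"), indent=2))
```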

Kubernetes Storage with Cloudian

Containerized applications require agile and scalable storage. The Cloudian Kubernetes S3 Operator lets you access exabyte-scalable Cloudian storage from your Kubernetes-based applications. Built on the S3 API, this lightweight Operator lets you dynamically or statically provision object storage. You get cloud-like storage access in your own data center.

Cloudian's major features for Kubernetes storage include the following:

S3 API for Application Portability: Eliminates lock-in and improves application portability. Provides fast, self-service storage access using the standard Kubernetes PV and PVC method to provision resources.

Multi-tenancy for Shared Storage: Lets you create separate namespaces and self-service management environments for development and production users. Each tenant's environment is isolated, with data invisible to other tenants. Performance can be managed with integrated quality of service (QoS) controls.

Hybrid Cloud-Enabled: Makes it simple to replicate or migrate data to AWS, GCP, or Azure. Data in the cloud is always stored in cloud-native format, which is directly accessible to cloud-based applications with no lock-in.

What's New in K8s 1.23?

The last release of 2021 brought several significant changes to how Kubernetes works. Kubernetes 1.23 delivered 47 enhancements, including a number of stable enhancements, several features moving into beta, some all-new alpha features, and one notable deprecation.

That's it! I hope you found the information above useful.

Wrapping Up!

Kubernetes multi-tenancy is the ability to run workloads belonging to different entities so that each workload is isolated from the others. It is becoming an essential topic as more organizations use Kubernetes at a larger scale. To succeed, we need to learn more about achieving soft and hard multi-tenancy in Kubernetes, along with the cost and security considerations.

Identifying the needs, designing the system, and picking the tools are all essential, and storage in a cloud-native environment is no different. While the problem is complicated, numerous tools and approaches exist. As the cloud-native world progresses, new solutions will undoubtedly emerge, and we will update you as soon as we have more information.

If you have any questions or suggestions, drop them in the comment section or contact us. Thanks!